Zhang, Binjie (张斌杰)

Ph.D. Candidate, Computer Science

Show Lab, National University of Singapore (NUS)

E-mail: binjie97[AT]u.nus.edu

Biography

I am a Ph.D. candidate in Computer Science at the National University of Singapore (NUS), advised by Assistant Prof. Mike Zheng Shou in Show Lab. My research interests lie in video understanding, vision-language models, and world models, with a focus on deployable and compatible visual representations.

Before joining NUS, I received my M.Eng. in Computer Science and Technology from Tsinghua University, supervised by Prof. Chun Yuan, and my B.Eng. in Information Engineering from East China University of Science and Technology (ECUST), where I ranked 1 / 92.

I spent several years as an AI research intern at Tencent ARC Lab (Shenzhen), working on:

Compatible representation learning and model upgrades for large-scale image/video retrieval systems.

Cross-modality video understanding, including video-text retrieval and temporal grounding.

Broadly, my research aims to make visual and multimodal models easier to upgrade, reuse, and deploy in real systems, while enabling embodied and egocentric agents to anticipate both actions and visual futures.

News

[2026] Submitted GR-MCT (tool-using agents with multi-modal CoT) and PRCFC (lifelong imitation learning) to CVPR 2026 as first author.

[2025] Ego-centric Predictive Model Conditioned on Hand Trajectories submitted to ICLR 2025.

[2023] Darwinian Model Upgrades accepted to AAAI 2023.

[2022] Towards Universal Backward-Compatible Representation Learning accepted to IJCAI 2022 (long oral).

[2022] Hot-Refresh Model Upgrades accepted to ICLR 2022, and received the Tencent Technology Breakthrough Award and SZCCF Science and Technology Award.

Selected Publications

(* indicates first author or core contribution)

GR-MCT: Group-Relative Reasoning with Multi-Modal CoT for Tool-Using Agents*

CVPR, under submission, 2026.

PRCFC: Lifelong Imitation Learning via Prototype Replay and Coarse-to-Fine Compatibility*

CVPR, under submission, 2026.

Ego-centric Predictive Model Conditioned on Hand Trajectories*

ICLR, under review, 2025.

[Paper]

TaCA: Upgrading Your Visual Foundation Model with a Task-Agnostic Compatible Adapter*

Binjie Zhang, Yixiao Ge, Xuyuan Xu,, Mike Zheng Shou

arXiv, 2023.

[Paper]

Darwinian Model Upgrades: Model Evolving with Selective Compatibility*

Binjie Zhang, Yixiao Ge, Xuyuan Xu, Chun Yuan

AAAI, 2023.

[Paper]

Towards Universal Backward-Compatible Representation Learning*

Binjie Zhang, Yixiao Ge, Yantao Shen, Yu Li, Chun Yuan

IJCAI (long oral), 2022.

[Paper]

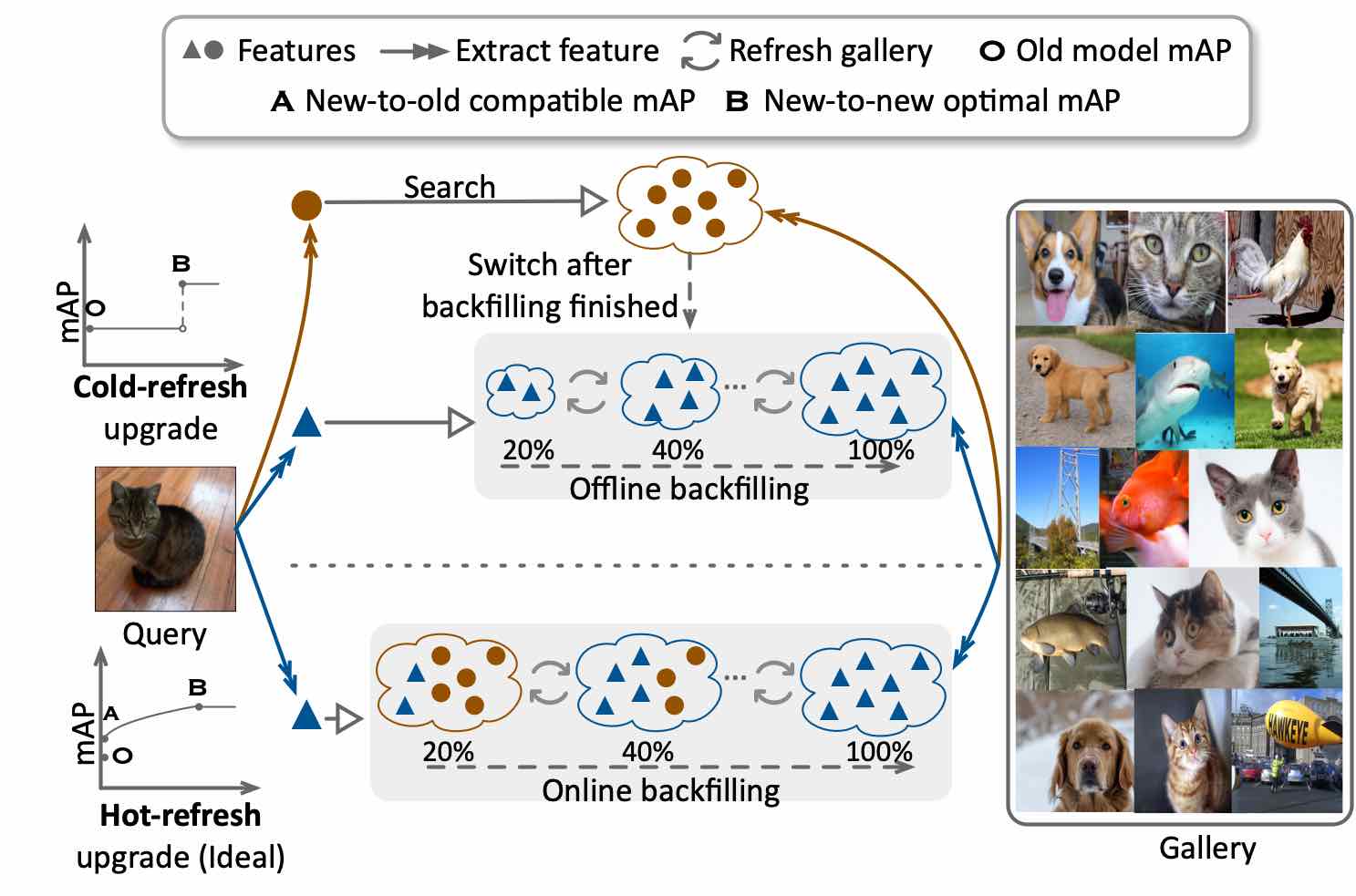

Hot-Refresh Model Upgrades with Regression-Alleviating Compatible Training in Image Retrieval*

Binjie Zhang, Yixiao Ge, Yantao Shen, Shupeng Su, Chun Yuan, Ying Shan

ICLR, 2022.

[Paper]

A more complete and up-to-date list can be found on my Google Scholar.

Projects

Robot Lifelong Learning (PRCFC)

Ego-Centric Predictive Model

Task-Agnostic Compatible Adapter (TaCA)

Hot-Refresh Model Upgrades

Research Experience

Ph.D. Candidate, Show Lab @ NUS

Jan. 2023 – Present, Singapore

Advisor: Mike Zheng Shou

Research on video understanding, vision-language models, world models, and compatible visual representations.

AI Research Intern, Tencent ARC Lab – Compatible Representation Learning Group

2020 – 2023, Shenzhen, China

Advisors: Yixiao Ge, Yantao Shen

Worked on backward-compatible representation learning and model upgrades for large-scale image and video retrieval systems, including multi-GPU PyTorch pipelines and hot-refresh deployment strategies.

AI Research Intern, Tencent ARC Lab – Cross-Modality Video Understanding Group

2019 – 2020, Shenzhen, China

Advisors: Yu Li, Ying Shan

Developed multimodal models for video-text retrieval and temporal grounding on large-scale video-language datasets.

Education

National University of Singapore (NUS)

Ph.D. in Computer Science, Jan. 2023 – Jan. 2027 (expected), Singapore

Advisor: Mike Zheng Shou

Tsinghua University (THU)

M.Eng. in Computer Science and Technology, Aug. 2019 – Jul. 2022, Beijing, China

Advisor: Chun Yuan

Research focus: compatible representation learning; cross-modal video understanding.

East China University of Science and Technology (ECUST)

B.Eng. in Information Engineering, Aug. 2015 – Jul. 2019, Shanghai, China

Cumulative GPA: 3.77 / 4.00, Ranking: 1 / 92.

Selected Awards

Tencent Technology Breakthrough Award – Hot-Refresh Model Upgrades, 2022.

SZCCF Science and Technology Award – Efficient Model Upgrades, 2022.

Excellent Master's Degree Graduate in Beijing & Outstanding Master's Graduation Thesis, 2022.

Annual College Personage Award (highest student honor at ECUST), 2018.

National Scholarship for Undergraduates (twice), Ministry of Education of China, 2016 & 2017.

Academic Service

Reviewer: ECCV, ICCV, AAAI, IJCAI, CVPR, ICLR

© Binjie Zhang · Last updated: Nov. 2025